This blog post provides AWS Certified Security Specialty exam questions and guides you through AWS security concepts such as designing and implementing security solutions on AWS. As more organizations build applications on AWS, the security risks grow in parallel, and this is where AWS security specialists come into the picture.

To help you learn more about this role and the AWS Certified Security Specialty certification exam, the next section provides free questions with detailed answers. They will help you understand the certification and speed up your preparation for the SCS-C02 exam.

Also, note that the exam syllabus covers questions from the following domains:

Let’s get started!


A. You can enable or disable the automatic key rotation in the AWS console or CLI. The key rotation frequency is 1 year

B. AWS manages the key rotation, and the user cannot disable it. The key is rotated every 1 year

C. You can enable or disable the automatic key rotation in the AWS console or CLI. The key rotation frequency can also be configured as 1 month, 1 year or 3 years

D. The key rotation is managed by AWS. The key is automatically rotated every three years

Correct Answer: B

Explanation

AWS automatically rotates the key material of AWS managed KMS keys, and users cannot change that behavior. The KMS key in this question is the AWS managed key “aws/s3”.

Option A is incorrect because users cannot enable or disable key rotation for AWS managed keys. That setting is available only for customer managed keys.

Option B is CORRECT because AWS manages the rotation of AWS managed keys, users cannot disable it, and the key material is rotated every year (approximately 365 days).

Option C is incorrect because users cannot configure key rotation for AWS managed keys. Even for customer managed keys, the rotation period is set in days (90 to 2,560), so a 1-month period is not an option.

Option D is incorrect because the three-year (1,095-day) schedule is outdated. In May 2022, AWS KMS changed the rotation of AWS managed keys from every three years to every year.
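The key rotation rules in the current AWS KMS documentation can be captured in a small Python model. This is a hypothetical sketch, not an AWS API. The function and dataclass names are illustrative. The `"AWS"`/`"CUSTOMER"` values mirror the `KeyManager` field that DescribeKey returns. The day ranges are assumptions that you should re-check against the current KMS key rotation page.

```python
from dataclasses import dataclass
from typing import Optional

# Assumed values from the AWS KMS developer guide at the time of writing;
# re-check the current key rotation documentation before relying on them.
AWS_MANAGED_PERIOD_DAYS = 365      # AWS managed keys: fixed yearly rotation
CUSTOMER_MIN_DAYS = 90             # customer managed keys: configurable range
CUSTOMER_MAX_DAYS = 2560
CUSTOMER_DEFAULT_DAYS = 365


@dataclass
class RotationSetting:
    enabled: bool
    period_days: Optional[int]
    user_configurable: bool


def rotation_setting(key_manager: str, enable: bool = False,
                     period_days: Optional[int] = None) -> RotationSetting:
    """Model the effective rotation behavior of a KMS key.

    key_manager mirrors DescribeKey's KeyManager field: "AWS" for AWS managed
    keys (such as aws/s3), "CUSTOMER" for customer managed keys.
    """
    if key_manager == "AWS":
        # The caller's choices are ignored: AWS always rotates these keys yearly.
        return RotationSetting(True, AWS_MANAGED_PERIOD_DAYS, False)
    if key_manager == "CUSTOMER":
        if not enable:  # rotation is off unless the customer enables it
            return RotationSetting(False, None, True)
        days = CUSTOMER_DEFAULT_DAYS if period_days is None else period_days
        if not CUSTOMER_MIN_DAYS <= days <= CUSTOMER_MAX_DAYS:
            raise ValueError(
                f"rotation period must be {CUSTOMER_MIN_DAYS}-{CUSTOMER_MAX_DAYS} days")
        return RotationSetting(True, days, True)
    raise ValueError(f"unknown key manager: {key_manager!r}")


# aws/s3 is an AWS managed key, so asking to disable rotation changes nothing.
print(rotation_setting("AWS", enable=False))
```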

For more information on AWS KMS key rotation, kindly refer to the URL provided below: https://docs.aws.amazon.com/kms/latest/developerguide/rotate-keys.html

A. Use “aws kms encrypt” to encrypt the file. No envelope encryption is required in this case

B. Use “aws kms generate-data-key” to generate a data key, then use the plain text data key to encrypt the file

C. Use “aws kms generate-data-key” to generate a data key, then use the encrypted data key to encrypt the file

D. Envelope encryption is required in this case. Use “aws kms encrypt” to generate a data key, then use the plain text data key to encrypt the file

Correct Answer: B

Explanation

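Option A is incorrect because “aws kms encrypt” cannot handle this file. It accepts at most 4 KB (4,096 bytes) of plaintext, and the file is larger than that, so envelope encryption must be used.

Option B is CORRECT because the file is larger than 4 KB, so envelope encryption must be used. In envelope encryption, the file is encrypted with the plaintext data key returned by “aws kms generate-data-key”. The encrypted copy of the data key is stored alongside the ciphertext, and the plaintext key is discarded after use.

Option C is incorrect because the encrypted data key cannot be used to encrypt data. Envelope encryption uses the plaintext data key, and the encrypted copy is only kept so that KMS can recover the plaintext key later.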

Option D is incorrect because the “aws kms encrypt” command is not used to generate the data key.
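The envelope-encryption workflow behind option B can be sketched in Python with only the standard library. This is a hypothetical illustration. `FakeKms` stands in for the real service; with boto3 you would call `generate_data_key(KeyId=..., KeySpec="AES_256")` and read `Plaintext` and `CiphertextBlob` from the response. The XOR-keystream cipher is a deliberately simple placeholder for a real algorithm such as AES-GCM and must not protect real data.

```python
import hashlib
import secrets
from typing import Tuple

KMS_ENCRYPT_MAX_BYTES = 4096  # largest plaintext "aws kms encrypt" accepts


def needs_envelope(data: bytes) -> bool:
    return len(data) > KMS_ENCRYPT_MAX_BYTES


def _toy_cipher(key: bytes, nonce: bytes, data: bytes) -> bytes:
    """TOY cipher: XOR with a SHAKE-256 keystream. Illustration only; it is not
    authenticated and must not be used for real data (use AES-GCM instead)."""
    stream = hashlib.shake_256(key + nonce).digest(len(data))
    return bytes(a ^ b for a, b in zip(data, stream))


class FakeKms:
    """Hypothetical in-memory stand-in for KMS GenerateDataKey and Decrypt."""

    def __init__(self) -> None:
        self._kms_key = secrets.token_bytes(32)  # never leaves the "service"

    def generate_data_key(self) -> Tuple[bytes, bytes]:
        plaintext_key = secrets.token_bytes(32)
        nonce = secrets.token_bytes(16)
        encrypted_key = nonce + _toy_cipher(self._kms_key, nonce, plaintext_key)
        return plaintext_key, encrypted_key

    def decrypt(self, encrypted_key: bytes) -> bytes:
        nonce, body = encrypted_key[:16], encrypted_key[16:]
        return _toy_cipher(self._kms_key, nonce, body)


def envelope_encrypt(kms: FakeKms, data: bytes) -> dict:
    plaintext_key, encrypted_key = kms.generate_data_key()
    nonce = secrets.token_bytes(16)
    # The file is encrypted locally with the PLAINTEXT data key...
    ciphertext = _toy_cipher(plaintext_key, nonce, data)
    # ...and only the ENCRYPTED data key is stored next to the ciphertext.
    # (del only drops this reference; Python cannot reliably wipe memory.)
    del plaintext_key
    return {"encrypted_key": encrypted_key, "nonce": nonce, "ciphertext": ciphertext}


def envelope_decrypt(kms: FakeKms, envelope: dict) -> bytes:
    plaintext_key = kms.decrypt(envelope["encrypted_key"])  # one KMS call
    return _toy_cipher(plaintext_key, envelope["nonce"], envelope["ciphertext"])


kms = FakeKms()
big_file = b"x" * 10_000
print(needs_envelope(big_file))                      # True: too large for kms encrypt
envelope = envelope_encrypt(kms, big_file)
print(envelope_decrypt(kms, envelope) == big_file)   # True
```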

A. The CMK key ID is needed for “aws kms decrypt”

B. The CMK key ARN is needed for “aws kms decrypt”

C. The encrypted data key is needed for “aws kms decrypt”

D. There is no need to add the CMK to decrypt in the command

Correct Answer: D

Explanation

The --key-id parameter is required only when the ciphertext was encrypted under an asymmetric KMS key. With a symmetric KMS key, KMS gets the key from the metadata that it adds to the symmetric ciphertext blob.

The file can be decrypted with the following CLI command:

```bash
aws kms decrypt \
    --ciphertext-blob fileb://ExampleEncryptedFile \
    --output text \
    --query Plaintext | base64 --decode > ExamplePlaintextFile
```

Options A and B are incorrect: no key ID or key ARN is required when decrypting with a symmetric CMK.

Option C is incorrect: the encrypted data key is not required to decrypt the file with this command.

Option D is CORRECT: the --key-id parameter is not required when decrypting with a symmetric CMK. AWS KMS gets the CMK that was used to encrypt the data from the metadata in the ciphertext blob.


A. The secret access key and access key token have expired for the Jenkins EC2 IAM role

B. The key policy of the CMK was added with a ViaService condition for EC2 service

C. The key policy of the CMK was recently modified with the addition of a deny for the IAM role that Jenkins EC2 is using

D. An SCP policy was added in the Organization which allows kms:encryption operation for EC2 resources

Correct Answer: C

Explanation

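Check whether the IAM role is allowed to use the CMK in both the key policy and the IAM policy. The key policy must allow the role, either directly or by enabling IAM policies to grant access (in which case the IAM policy must allow it). Neither policy may contain an explicit deny for the role.

Option A is incorrect because the credentials of an EC2 instance role are temporary and are rotated automatically by AWS before they expire, so expired credentials would not explain the failure.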

Option B is incorrect because the ViaService condition for EC2 service would allow the key usage for EC2 instances so that it cannot be the cause of the issue.

Option C is CORRECT : An explicit deny will disallow the key to be used by the Jenkins server. That may be the cause of the failure.

Option D is incorrect because an SCP that allows KMS encryption operations cannot cause this failure. An allow in an SCP never blocks a request.
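A simplified model of how the key policy, the IAM policy, and an explicit deny combine may make option C easier to see. This is a hypothetical sketch that ignores grants, SCPs, and policy conditions. Each `Effect` value describes what that policy says about the Jenkins role for the requested KMS action.

```python
from enum import Enum


class Effect(Enum):
    ALLOW = "Allow"
    DENY = "Deny"
    NONE = "NotMentioned"


def kms_request_allowed(key_policy: Effect, key_policy_enables_iam: bool,
                        iam_policy: Effect) -> bool:
    """Simplified KMS authorization check (grants, SCPs and conditions omitted).

    key_policy_enables_iam: True if the key policy contains the statement that
    lets IAM policies in the account control access to the key.
    """
    # 1. An explicit deny in either policy always wins.
    if Effect.DENY in (key_policy, iam_policy):
        return False
    # 2. The key policy can allow the principal directly...
    if key_policy is Effect.ALLOW:
        return True
    # 3. ...or delegate to IAM, in which case an IAM allow is sufficient.
    return key_policy_enables_iam and iam_policy is Effect.ALLOW


# Option C: a deny for the role was added to the key policy.
print(kms_request_allowed(Effect.DENY, True, Effect.ALLOW))  # False
```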

A. The IAM user in account B does not have IAM permission to get an object in the particular S3 bucket

B. The Resource in bucket policy should include “arn:aws:s3:::AccountABucketName”

C. The Action in bucket policy should add the action of “s3:GetObjectACL”

D. The Principal in bucket policy should add a cross-account IAM role assumed by the IAM user in account B

Correct Answer: A

Explanation

There are several ways to provide cross-account S3 access: grant access directly to an IAM user, have the user assume a cross-account role, or use bucket ACLs.

This case uses an IAM user. The IAM user must be granted the S3 permissions through an IAM policy, and the bucket owner must also grant permissions to the IAM user through a bucket policy. For details, see the first resolution in the link below.

Option A is CORRECT because cross-account access needs two grants. First, a bucket policy in account A must allow the IAM user from account B. Second, an IAM policy in account B must allow the user to access the bucket. The IAM policy is the missing piece here.

Option B is incorrect because the resource “arn:aws:s3:::AccountABucketName” is unnecessary and would not provide the necessary permission.

Option C is incorrect because “s3:GetObjectACL” is not required. This case only needs “s3:GetObject”.

Option D is incorrect because there is no mention of an IAM user in account B to assume a cross-account role in this particular scenario. Instead, as long as the user is given an “s3:GetObject” permission to the S3 bucket, it should be able to get objects in the bucket.

For more information on cross-account S3 bucket object access, do refer to the URL provided below https://aws.amazon.com/premiumsupport/knowledge-center/cross-account-access-s3/
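The two grants can be written out as policy documents, with a tiny check that both sides allow the read. This is a minimal sketch. The account ID, user name, and bucket name are hypothetical placeholders. `allows_get` is an illustrative helper that only understands exact ARNs and a trailing `/*`; it is not an IAM policy evaluator.

```python
import json

# Hypothetical identifiers, for illustration only.
ACCOUNT_B_ID = "222222222222"
USER_ARN = f"arn:aws:iam::{ACCOUNT_B_ID}:user/example-user"
BUCKET = "example-account-a-bucket"
OBJECTS_ARN = f"arn:aws:s3:::{BUCKET}/*"

# 1. Bucket policy, attached to the bucket in account A: trusts the user in B.
bucket_policy = {
    "Version": "2012-10-17",
    "Statement": [{
        "Sid": "AllowAccountBUserRead",
        "Effect": "Allow",
        "Principal": {"AWS": USER_ARN},
        "Action": "s3:GetObject",
        "Resource": OBJECTS_ARN,
    }],
}

# 2. Identity policy, attached to the user in account B (the missing piece).
iam_user_policy = {
    "Version": "2012-10-17",
    "Statement": [{
        "Effect": "Allow",
        "Action": "s3:GetObject",
        "Resource": OBJECTS_ARN,
    }],
}


def allows_get(policy: dict, object_arn: str) -> bool:
    """Does any Allow statement grant s3:GetObject on object_arn?"""
    for stmt in policy["Statement"]:
        if stmt["Effect"] != "Allow" or stmt["Action"] != "s3:GetObject":
            continue
        pattern = stmt["Resource"]
        if pattern == object_arn or (pattern.endswith("/*")
                                     and object_arn.startswith(pattern[:-1])):
            return True
    return False


def cross_account_read_ok(object_arn: str) -> bool:
    # Cross-account access needs an allow on BOTH sides.
    return allows_get(bucket_policy, object_arn) and allows_get(iam_user_policy, object_arn)


print(json.dumps(bucket_policy, indent=2))
print(cross_account_read_ok(f"arn:aws:s3:::{BUCKET}/report.csv"))  # True
```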

A. Conduct an audit once EC2 autoscaling happens

B. Conduct an audit if you’ve added or removed software in your accounts

C. Conduct an audit if you ever suspect that an unauthorized person might have accessed your account

D. When there are changes in your organization

Correct Answer: A

Explanation

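Option A is CORRECT because an EC2 Auto Scaling event is not one of the situations in which the AWS security audit guidelines call for an audit.

Options B, C, and D are incorrect because they are situations in which AWS recommends auditing your security configuration.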

According to the AWS documentation, you should audit your security configuration in the following situations:

For more information on Security Audit guidelines, please visit the below URL: https://docs.aws.amazon.com/general/latest/gr/aws-security-audit-guide.html

A. AWS VPN

B. AWS VPC Peering

C. AWS NAT gateways

D. AWS Direct Connect

Correct Answers: A and D

Explanation

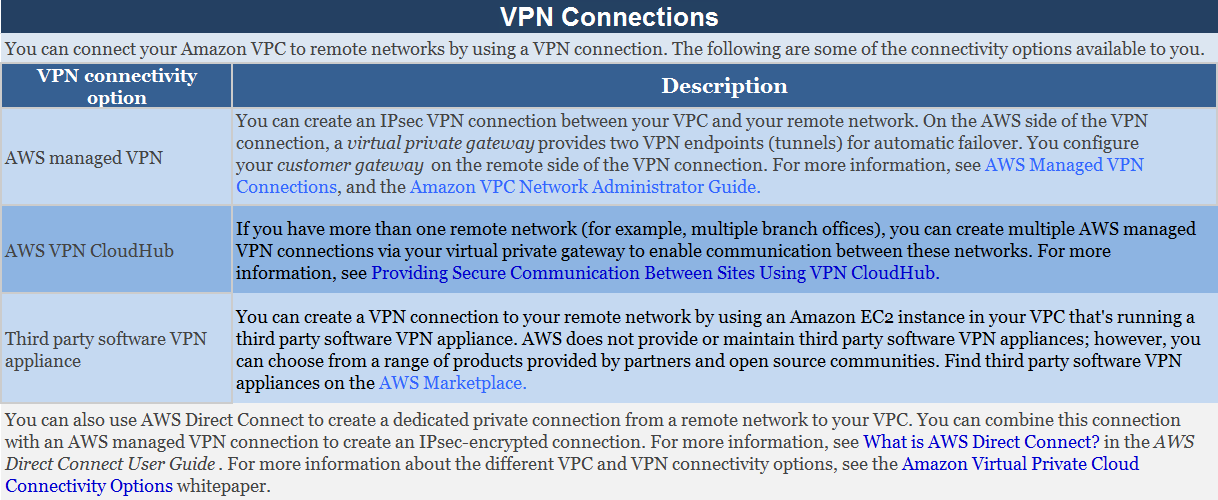

The AWS Documentation mentions the following which supports the above requirements.

With AWS Direct Connect + VPN, you can combine AWS Direct Connect dedicated network connections with the Amazon VPC VPN. An AWS Direct Connect public VIF establishes a dedicated network connection between your network and public AWS resources, such as an Amazon virtual private gateway IPsec endpoint. Note that you need to use a public VIF for VPN encryption.

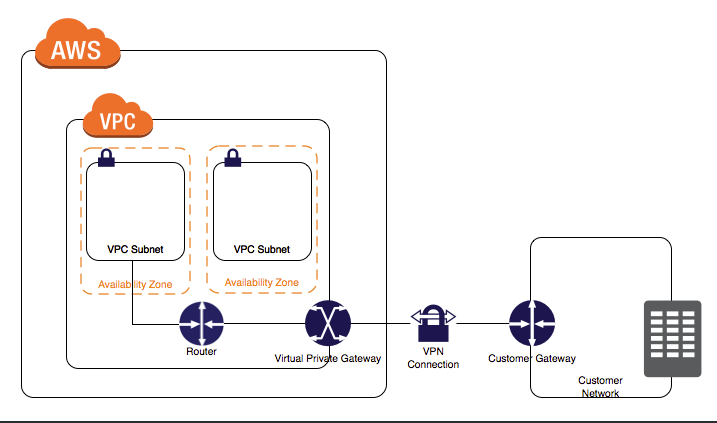

Here is the diagram:

Option B is incorrect because VPC peering is only used for connection between VPCs and cannot be used to connect On-premises infrastructure to the AWS Cloud.

Option C is incorrect because NAT gateways are used to connect instances in a private subnet to the Internet.

A. Use an IAM policy that references the LDAP account identifiers and the AWS credentials

B. Use SAML (Security Assertion Markup Language) to enable single sign-on between AWS and LDAP

C. Use AWS Security Token Service (AWS STS) to issue long-lived AWS credentials

D. Use IAM roles to rotate the IAM credentials when LDAP credentials are updated automatically

Correct Answer: B

Explanation

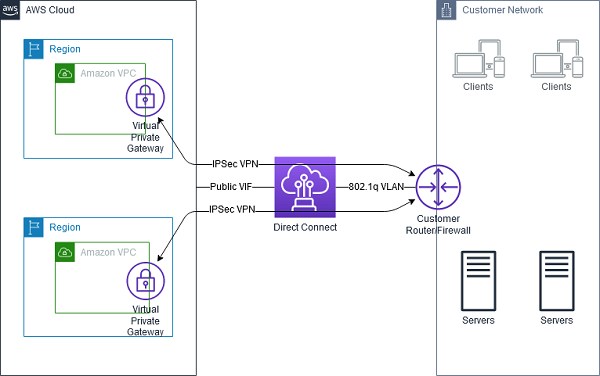

You can use SAML to provide your users with federated single sign-on (SSO) to the AWS Management Console or federated access to call AWS API operations.

Essentially, on the AWS side you create an IAM SAML identity provider that represents your on-premises identity provider (which authenticates users against LDAP). You then create a role whose trust policy trusts that identity provider.

Options A, C, and D are incorrect because none of them enables single sign-on.
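The trust policy for such a SAML role follows a standard shape. The Python helper below builds it. This is a sketch: the account ID `111122223333` and provider name `OnPremLdapIdP` are hypothetical. The `sts:AssumeRoleWithSAML` action and the `SAML:aud` check against the AWS sign-in endpoint follow the pattern in the IAM documentation for SAML federation.

```python
import json


def saml_role_trust_policy(account_id: str, provider_name: str) -> dict:
    """Trust policy for a role that federated users assume through SAML.

    provider_name is the name of the IAM SAML identity provider created for
    the on-premises IdP (hypothetical in the example below).
    """
    provider_arn = f"arn:aws:iam::{account_id}:saml-provider/{provider_name}"
    return {
        "Version": "2012-10-17",
        "Statement": [{
            "Effect": "Allow",
            "Principal": {"Federated": provider_arn},
            "Action": "sts:AssumeRoleWithSAML",
            # Only accept assertions addressed to the AWS sign-in endpoint.
            "Condition": {"StringEquals": {"SAML:aud": "https://signin.aws.amazon.com/saml"}},
        }],
    }


print(json.dumps(saml_role_trust_policy("111122223333", "OnPremLdapIdP"), indent=2))
```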

A.

```json
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Sid": "DataGovernancePolicy1",
      "Effect": "Deny",
      "Action": ["s3:CreateBucket"],
      "Resource": ["arn:aws:s3:::*"],
      "Condition": {
        "StringNotEquals": {
          "s3:LocationConstraint": "eu-west-2"
        }
      }
    },
    {
      "Sid": "DataGovernancePolicy2",
      "Effect": "Allow",
      "Action": ["s3:CreateBucket"],
      "Resource": ["arn:aws:s3:::*"],
      "Condition": {
        "StringEquals": {
          "s3:LocationConstraint": "eu-west-2"
        }
      }
    }
  ]
}
```

—————————————————————————-

B.

```json
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Sid": "DataGovernancePolicy1",
      "Effect": "Deny",
      "Action": ["s3:CreateBucket"],
      "Resource": ["arn:aws:s3:::*"],
      "Condition": {
        "StringLike": {
          "s3:LocationConstraint": "eu-west-2"
        }
      }
    },
    {
      "Sid": "DataGovernancePolicy2",
      "Effect": "Allow",
      "Action": ["s3:CreateBucket"],
      "Resource": ["arn:aws:s3:::*"],
      "Condition": {
        "StringNotEquals": {
          "s3:LocationConstraint": "eu-west-2"
        }
      }
    }
  ]
}
```

—————————————————————————-

Correct Answer: A

Explanation

Option A is CORRECT because the statement “DataGovernancePolicy1” denies creating all S3 buckets in non-eu-west-2 regions, and the statement “DataGovernancePolicy2” allows creating S3 buckets in region eu-west-2.

Option B is incorrect because it does the opposite. “DataGovernancePolicy1” denies creating buckets in region eu-west-2 (StringLike without wildcards behaves like StringEquals), and “DataGovernancePolicy2” allows creating buckets in regions other than eu-west-2. Pay close attention to the condition operators in an SCP.

Option C is incorrect because s3:x-amz-region is not a valid condition key.

Option D is incorrect because s3:x-amz-region is not a valid condition key.
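To see why policies A and B behave in opposite ways, the Python sketch below evaluates their statements for a given bucket region. It is a hypothetical toy evaluator, not AWS's policy engine. It understands only the three condition operators used above and the `s3:LocationConstraint` key, and it assumes every statement matches the `s3:CreateBucket` action.

```python
from fnmatch import fnmatchcase


def _condition_holds(operator: str, actual: str, expected: str) -> bool:
    if operator == "StringEquals":
        return actual == expected
    if operator == "StringNotEquals":
        return actual != expected
    if operator == "StringLike":
        # Approximation: fnmatch also treats [...] as a character class; IAM does not.
        return fnmatchcase(actual, expected)
    raise ValueError(f"unsupported operator: {operator}")


def _applies(statement: dict, region: str) -> bool:
    # Every condition must hold; only s3:LocationConstraint is modeled.
    for operator, keys in statement.get("Condition", {}).items():
        for key, expected in keys.items():
            if key != "s3:LocationConstraint":
                raise ValueError(f"unsupported condition key: {key}")
            if not _condition_holds(operator, region, expected):
                return False
    return True


def create_bucket_decision(statements: list, region: str) -> str:
    effects = {s["Effect"] for s in statements if _applies(s, region)}
    if "Deny" in effects:  # an explicit deny always wins
        return "Deny"
    return "Allow" if "Allow" in effects else "ImplicitDeny"


def _stmt(effect: str, operator: str) -> dict:
    return {"Effect": effect, "Action": ["s3:CreateBucket"], "Resource": ["arn:aws:s3:::*"],
            "Condition": {operator: {"s3:LocationConstraint": "eu-west-2"}}}


# The Statement lists of options A and B (Sids omitted).
POLICY_A = [_stmt("Deny", "StringNotEquals"), _stmt("Allow", "StringEquals")]
POLICY_B = [_stmt("Deny", "StringLike"), _stmt("Allow", "StringNotEquals")]

for region in ("eu-west-2", "us-east-1"):
    print(region, "A:", create_bucket_decision(POLICY_A, region),
          "B:", create_bucket_decision(POLICY_B, region))
```

Running it prints `eu-west-2 A: Allow B: Deny` and `us-east-1 A: Deny B: Allow`, which matches the explanation for options A and B.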

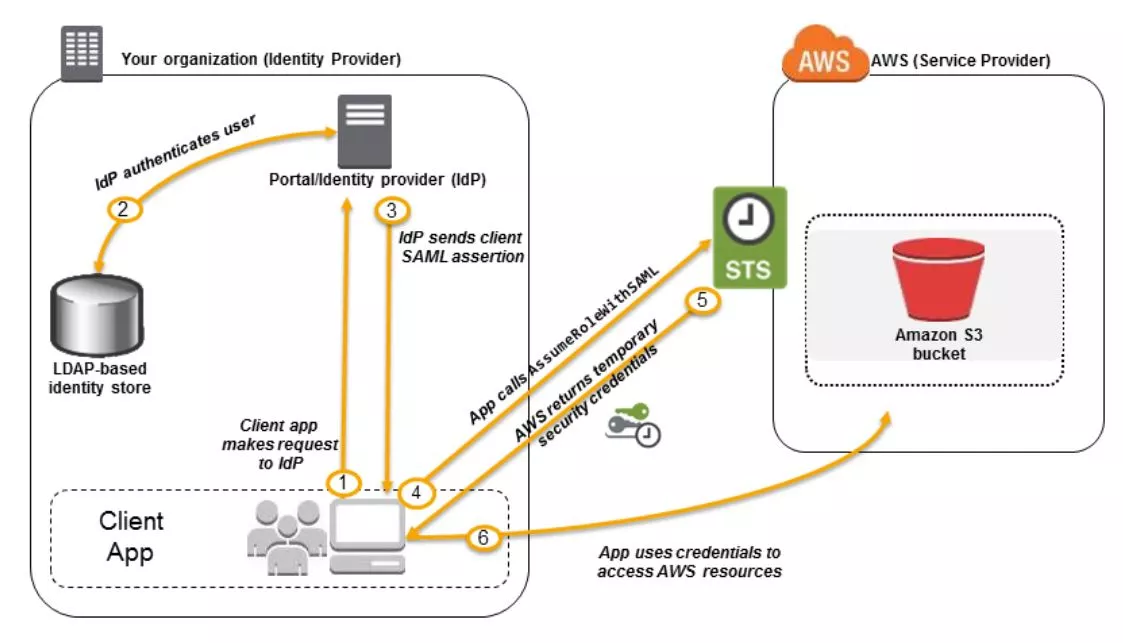

A. All S3 data is encrypted by default

B. Use AWS SSE-S3

C. Enable AWS-KMS encryption and specify aws/s3 (AWS KMS-managed CMK) as the key for the Client-Side Encryption

D. Use Custom AWS KMS customer master key (CMK)

Correct Answers: B and D

Explanation

Option A is incorrect because not all S3 data is encrypted by default. Since January 2023, S3 applies SSE-S3 to new object uploads automatically, but objects stored before then are not encrypted retroactively. You still need to configure SSE-S3 or SSE-KMS to meet the requirement.

Option B is CORRECT because S3 objects can be encrypted using server-side encryption with S3 managed keys (SSE-S3), which uses AES-256.

Option C is incorrect because server-side encryption should be used instead of client-side encryption. The aws/s3 AWS managed key is used by S3 for server-side encryption, not as a key for client-side encryption.

Option D is CORRECT because a custom AWS KMS customer master key (CMK), now called a customer managed key, encrypts the S3 objects. It also lets the customer manage the key policy and key rotation, which satisfies the requirement.

References:

For more information on AWS S3 Encryption options, refer to the URL provided below https://docs.aws.amazon.com/AmazonS3/latest/dev/bucket-encryption.html

For information on Custom AWS KMS Customer Master Key (CMK) and AWS Managed CMK, refer to the URL below: https://aws.amazon.com/premiumsupport/knowledge-center/s3-object-encrpytion-keys/
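Options B and D correspond to two shapes of a bucket's default encryption configuration. The Python helper below builds the `ServerSideEncryptionConfiguration` body for S3 PutBucketEncryption. This is a minimal sketch. The key ARN and bucket name are hypothetical. The boto3 call is shown only as a comment because it needs AWS credentials.

```python
from typing import Optional


def default_encryption_config(kms_key_arn: Optional[str] = None) -> dict:
    """Build the ServerSideEncryptionConfiguration for S3 PutBucketEncryption.

    No key ARN -> SSE-S3 (AES-256, keys managed by S3), as in option B.
    Key ARN    -> SSE-KMS with a customer managed key whose key policy and
                  rotation you control, as in option D.
    """
    if kms_key_arn is None:
        default = {"SSEAlgorithm": "AES256"}
    else:
        default = {"SSEAlgorithm": "aws:kms", "KMSMasterKeyID": kms_key_arn}
    return {"Rules": [{"ApplyServerSideEncryptionByDefault": default}]}


# Hypothetical key ARN, for illustration only.
KEY_ARN = "arn:aws:kms:eu-west-2:111122223333:key/1234abcd-12ab-34cd-56ef-1234567890ab"
config = default_encryption_config(KEY_ARN)
print(config)

# With credentials configured, the call would look like this (not executed here):
# import boto3
# boto3.client("s3").put_bucket_encryption(
#     Bucket="example-bucket", ServerSideEncryptionConfiguration=config)
```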

A. Check the Inbound security rules for the database security group. Check the Outbound security rules for the application security group

B. Check the Outbound security rules for the database security group. Check the Inbound security rules for the application security group

C. Check both the Inbound and Outbound security rules for the database security group. Check the Inbound security rules for the application security group

D. Check both Outbound security rules for the database security group. Check both the Inbound and Outbound security rules for the application security group

Correct Answer: A

Explanation

In this case, the application server initiates the communication with the database server. To confirm that the traffic can leave the application server, check the outbound rules in the application server's security group.

For the database server to accept traffic from the application server, it needs an inbound rule to allow it, hence we need to check the inbound rules in its security group.

AWS security groups are stateful: response traffic for an allowed connection is allowed automatically, regardless of the rules for the opposite direction. In general, you only need to check the rules along the direction of the initial traffic. That's why you don't need to check the inbound rules in the application server security group or the outbound rules in the database security group.

Option B is incorrect because it says to check the outbound rules for the database security group, which is unnecessary.

Option C is incorrect because you do not need to check for the Outbound security rules for the database security group.

Option D is incorrect because you do not need to check for Inbound security rules for the application security group.
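The "check only the initial direction" rule can be expressed as a toy Python model. This is a hypothetical sketch. Rules are simplified to `(protocol, port, peer_group_name)` tuples, and port 3306 (MySQL) is only an example. Real security groups start with an allow-all outbound rule, but the database group here deliberately has no outbound rules to show they are never consulted.

```python
from dataclasses import dataclass, field


@dataclass
class SecurityGroup:
    """Toy model. A rule is (protocol, port, peer_group_name)."""
    name: str
    inbound: set = field(default_factory=set)
    outbound: set = field(default_factory=set)


def connection_allowed(src: SecurityGroup, dst: SecurityGroup,
                       protocol: str, port: int) -> bool:
    """Can src open a connection to dst?

    Only the direction of the initial traffic is checked: src outbound and
    dst inbound. Security groups are stateful, so the reply is allowed
    automatically and dst outbound / src inbound rules are never consulted.
    """
    return ((protocol, port, dst.name) in src.outbound
            and (protocol, port, src.name) in dst.inbound)


# Hypothetical groups for the application and database servers.
app_sg = SecurityGroup("app-sg", outbound={("tcp", 3306, "db-sg")})
db_sg = SecurityGroup("db-sg", inbound={("tcp", 3306, "app-sg")})  # no outbound rules

print(connection_allowed(app_sg, db_sg, "tcp", 3306))  # True
```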

A. Enable S3 Server-side encryption on the metadata of each object

B. Put the metadata as object in the S3 bucket and then enable S3 Server side encryption

C. Put the metadata in a DynamoDB table and ensure the table is encrypted during creation time

D. Put the metadata in the S3 bucket itself

Correct Answer: C

Explanation

S3 object metadata is not encrypted.

Option A is incorrect because that's not possible. S3 stores information about an object's encryption, such as the key used, in the object's metadata. If the metadata itself were encrypted, S3 would need yet another way to work out how to decrypt each object's metadata.

Option B is incorrect because putting the metadata in the S3 bucket as an object simply creates a new object with its own metadata, which is still not encrypted.

Option C is CORRECT because when S3 objects are encrypted, their metadata is not, so the best option is to store the metadata in a DynamoDB table. DynamoDB encrypts all tables at rest. When you create the table, you choose the key: an AWS owned key, the AWS managed key, or a customer managed AWS KMS key.

Option D is incorrect because S3 object metadata is not encrypted.

For more information on using KMS encryption for S3, please refer to the below URL: https://docs.aws.amazon.com/AmazonS3/latest/dev/UsingKMSEncryption.html

A. AWS CloudFront

B. AWS Lambda

C. AWS Application Load Balancer

D. AWS Classic Load Balancer

Correct Answers: A and C

Explanation

AWS WAF is a web application firewall that helps protect your web applications or APIs against common web exploits and bots that may affect availability, compromise security, or consume excessive resources.

Option A is CORRECT because AWS WAF can be deployed on Amazon CloudFront. With CloudFront, WAF becomes part of your content delivery network (CDN), protecting your resources and content at the edge locations.

Option B is incorrect because AWS WAF doesn't protect Lambda functions directly. Instead, it can protect an Amazon API Gateway API in front of them.

Option C is CORRECT because AWS WAF can be deployed on Application Load Balancer (ALB). As a part of the Application Load Balancer, it can protect your origin web servers running behind the ALBs.

Option D is incorrect because AWS WAF can protect Application Load Balancer but not a Classic load balancer.

For more information on the web application firewall, kindly refer to the below URLs: https://aws.amazon.com/waf/

A. Fully End-to-end protection of data in transit

B. Fully End-to-end Identity authentication

C. Data encryption across the Internet

D. Protection of data in transit over the Internet

E. Peer identity authentication between VPN gateway and customer gateway

F. Data integrity protection across the Internet

Correct Answers: C, D, E and F

Explanation

First of all, end-to-end here means from the on-premises application endpoint to the service endpoint in the AWS Cloud, not from the customer gateway to the VPN gateway.

AWS VPN connection refers to the connection between your VPC and your own on-premises network. Site-to-Site VPN supports Internet Protocol security (IPsec) VPN connections.

As per the above diagram, the IPsec VPN tunnel is established between a virtual private gateway and a customer gateway, NOT between the actual endpoints. As a result, traffic between the gateways and the servers or service endpoints behind them is not necessarily encrypted.

Option C is correct. Data that is transmitted through the IPSec tunnel is encrypted.

Option D is correct as it protects data in transit over the internet.

Option E is correct. Peer identity authentication between VPN gateway and customer gateway is required for implementing VPN IPSec tunnel.

Option F is correct. The IPsec tunnel also protects the integrity of data transmitted over the internet.

Options A and B are incorrect because IPsec offers no guarantee of fully end-to-end data protection or identity authentication.

A. Create the certificate in Amazon KMS and upload it to the ELB

B. Store the certificate and private key in the ELB through the ECS service

C. Use the OpenSSL command to generate a certificate, upload it to IAM and configure ELB to use the certificate

D. Request a certificate in ACM and configure the Application Load Balancer to use the certificate

E. Use Amazon Fargate as the container compute engine. It offers native TLS security in the Application Load Balancer

Correct Answers: C and D

Explanation

Essentially, this question asks how to create and set up a certificate for an ALB.

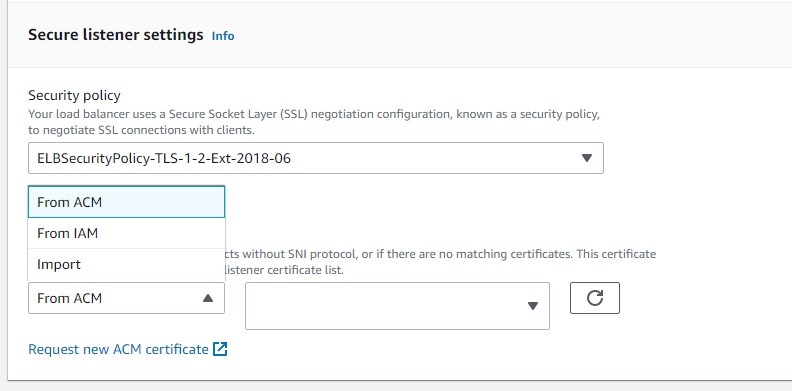

Based on the above screenshot, there are 3 different ways to configure a certificate on ALB:

Note: Use IAM as a certificate manager only when you support HTTPS connections in a region that is not supported by ACM.

Option A is incorrect because KMS is used for the storage and management of data encryption keys and would not assist in creating a certificate in ELB.

Option B is incorrect because the certificate of ELB is not configured through the ECS service.

Option C is CORRECT because you can use OpenSSL to generate certificates and upload the certificates to IAM/ACM for ELB.

Option D is CORRECT because AWS Certificate Manager (ACM) can be used for creating and managing public SSL/TLS certificates.

Option E is incorrect because AWS Fargate is a compute engine for containers. It does not provide TLS certificates for the Application Load Balancer.

A. Change your AWS account root user password

B. Delete all AWS EC2 resources even if you are unsure if they are compromised or not

C. Rotate access keys if they were authorized and are still needed, otherwise delete them

D. Respond to any notifications you received from AWS Support

E. Enable AWS Shield Advanced to protect the EC2 resources from DDoS attacks

Correct Answers: A, C and D

Explanation

Option A is CORRECT because the AWS root account has complete access to AWS resources, services, and billing. Changing your AWS account root user password is necessary to protect your AWS account from being compromised.

Option B is incorrect because it is not suitable to delete all resources at this stage. Instead, you should delete any resources on your account you didn’t create, such as EC2, EBS, IAM resources, etc.

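Option C is CORRECT because anyone holding an exposed access key can use it. Rotating the keys that are authorized and still needed, and deleting the rest, invalidates any copies an attacker may have.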

Option D is CORRECT because you should sign in to the AWS Support Center, check the notification detail, and respond to it.

Option E is incorrect because DDoS protection is not an urgent step when the AWS account is compromised. The compromise may involve many other services, such as IAM and S3, not just EC2 instances.

A. Enable VPC flow logs to know the source IP addresses

B. Monitor the S3 API calls by enabling S3 data event for all buckets in CloudTrail

C. Monitor the S3 API calls by using CloudWatch logging

D. Monitor the S3 API calls by S3 Inventory Configuration

Correct Answer: B

Explanation

The AWS Documentation mentions the following.

Amazon S3 is integrated with AWS CloudTrail. Once the S3 data event is enabled for the buckets, CloudTrail captures specific API calls made to Amazon S3 from your AWS account and delivers the log files to an Amazon S3 bucket that you specify. It captures API calls made from the Amazon S3 console or from the Amazon S3 API.

Using the information collected by CloudTrail, you can determine what request was made to Amazon S3, the source IP address from which the request was made, who made the request, when it was made, and so on.

Option A is incorrect because VPC flow logs cannot tell you who made the API call.

Option C is incorrect because CloudWatch logging on its own cannot provide the source IP addresses of calls to S3 buckets.

Option D is incorrect because S3 Inventory helps you understand your storage usage on S3, but not API calls.
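With S3 data events enabled, each CloudTrail record answers the who/what/when/where questions above. The Python sketch below pulls those fields from a CloudTrail log file. The field names (`eventTime`, `eventSource`, `eventName`, `sourceIPAddress`, `userIdentity`, `requestParameters`) are standard CloudTrail record fields. The sample record is hypothetical and heavily trimmed.

```python
import json


def summarize_s3_events(log_file_json: str) -> list:
    """Extract who/what/when/where from S3 events in a CloudTrail log file."""
    summary = []
    for record in json.loads(log_file_json).get("Records", []):
        if record.get("eventSource") != "s3.amazonaws.com":
            continue
        params = record.get("requestParameters") or {}
        summary.append({
            "when": record.get("eventTime"),
            "who": record.get("userIdentity", {}).get("arn"),
            "source_ip": record.get("sourceIPAddress"),
            "action": record.get("eventName"),
            "bucket": params.get("bucketName"),
            "key": params.get("key"),
        })
    return summary


# Hypothetical, heavily trimmed record for illustration.
SAMPLE = json.dumps({"Records": [{
    "eventTime": "2024-01-01T12:00:00Z",
    "eventSource": "s3.amazonaws.com",
    "eventName": "GetObject",
    "sourceIPAddress": "203.0.113.12",
    "userIdentity": {"type": "IAMUser", "arn": "arn:aws:iam::111122223333:user/example-user"},
    "requestParameters": {"bucketName": "example-bucket", "key": "report.csv"},
}]})

for event in summarize_s3_events(SAMPLE):
    print(event)
```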

A. The EC2 security group and the ACL in the EC2 subnet allow the inbound traffic. The security group denies the outbound traffic

B. The EC2 security group and the ACL in the EC2 subnet allow the inbound traffic. The ACL denies the outbound traffic

C. The ACL in the EC2 subnet denies the inbound traffic. The EC2 security group allows the inbound traffic

D. The EC2 security group denies the inbound traffic. The ACL in the EC2 subnet allows both inbound and outbound traffic

Correct Answer: B

Explanation

Note that your computer (IP address '203.0.113.12') is not in the same VPC as the EC2 instance ('172.31.16.140').

Option A is incorrect: security groups are stateful. Because the security group allows the incoming traffic, the response traffic is automatically allowed out.

Option B is CORRECT : This ensures that the inbound traffic is accepted. For the outbound traffic, as the ACL denies it, the message will be rejected. The configurations align with the flow logs.

Option C is incorrect : In this scenario, the incoming ping message is accepted. So the ACL rule for the inbound traffic should allow it.

Option D is incorrect : The inbound rule in the security group should allow the traffic since there is an ACCEPT for the incoming message.
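The flow-log pattern in option B follows from network ACLs being stateless and security groups being stateful. The Python sketch below is a hypothetical toy model of one ping. It returns the action a flow log would show for the request and for the reply (`None` when no reply is sent).

```python
from typing import Optional, Tuple


def ping_flow_log(nacl_inbound_allows: bool, sg_inbound_allows: bool,
                  nacl_outbound_allows: bool) -> Tuple[str, Optional[str]]:
    """(request, reply) actions for an ICMP echo from an outside host. Toy model."""
    # The request must pass the subnet's network ACL and the instance's security group.
    if not (nacl_inbound_allows and sg_inbound_allows):
        return ("REJECT", None)  # rejected, so no reply is sent
    # The reply: the security group is stateful and lets it out automatically,
    # but the network ACL is stateless and evaluates its outbound rules again.
    return ("ACCEPT", "ACCEPT" if nacl_outbound_allows else "REJECT")


print(ping_flow_log(True, True, False))  # ('ACCEPT', 'REJECT'), the pattern in option B
```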

A. Configure S3 Bucket Lifecycle Policy

B. Configure S3 Bucket Versioning

C. Configure S3 Bucket Event Notification

D. Configure Cross-Region Replication

E. Configure Same-Region Replication

F. Configure an AWS Lambda function to replicate S3 objects

Correct Answers: B and E

Explanation

Option A is incorrect because S3 lifecycle policies allow you to automatically review objects within your S3 Buckets and have them moved to Glacier or have the objects deleted from S3. They are not responsible for data replication between AWS accounts.

Option B is CORRECT because S3 bucket versioning allows creating a version of objects or data stored in S3. This is one of the requirements of S3 replication. Please check the reference link.

Option C is incorrect because the Amazon S3 event notification feature enables you to receive notifications when certain events happen in your bucket. This does not provide a solution to replicate data from the production account to the test account.

Option D is incorrect because Cross Region replication allows S3 data to be copied from one AWS region to another. Since the ask is to keep the data in the London region, we cannot be using this option.

Option E is CORRECT because S3 Same-Region Replication can be configured on an S3 bucket to replicate objects to another bucket in the same region automatically.

Option F is incorrect because implementing a Lambda function to replicate S3 objects to another bucket is not optimal: it requires creating and managing custom code. In addition, very large objects may not finish copying within the Lambda timeout, so you would have to split them into parts, which adds a lot of work.
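The two requirements behind options B and E can be checked before replication is configured. The Python sketch below is a partial prerequisite check plus the request body shape for S3 PutBucketReplication. All bucket names, ARNs, and the account ID are hypothetical. It does not cover IAM role permissions or destination bucket policies.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Bucket:
    name: str
    region: str
    versioning: str  # "Enabled", "Suspended", or "" if never enabled


def srr_problems(source: Bucket, dest: Bucket) -> List[str]:
    """Reasons Same-Region Replication could not be set up (partial check)."""
    problems = []
    for bucket in (source, dest):
        if bucket.versioning != "Enabled":
            problems.append(f"versioning is not enabled on {bucket.name}")
    if source.region != dest.region:
        problems.append("buckets are in different regions; that would be Cross-Region Replication")
    return problems


def replication_config(role_arn: str, dest_bucket: str, dest_account: str) -> dict:
    """ReplicationConfiguration body for S3 PutBucketReplication (all objects)."""
    return {
        "Role": role_arn,
        "Rules": [{
            "ID": "replicate-all",
            "Status": "Enabled",
            "Priority": 1,
            "Filter": {"Prefix": ""},  # empty prefix: every object
            "DeleteMarkerReplication": {"Status": "Disabled"},
            "Destination": {"Bucket": f"arn:aws:s3:::{dest_bucket}", "Account": dest_account},
        }],
    }


# Hypothetical production and test buckets, both in London (eu-west-2).
prod = Bucket("example-prod-data", "eu-west-2", "Enabled")
test = Bucket("example-test-data", "eu-west-2", "")
print(srr_problems(prod, test))  # ['versioning is not enabled on example-test-data']
```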

A. S3 buckets must enable server-side encryption with SSE-KMS

B. Bucket policy on the destination bucket must allow the source bucket owner to store the replicas

C. If the source bucket owner is not the object owner, the object owner must grant the bucket owner READ and READ_ACP permissions with the object access control list

D. S3 Bucket Lifecycle Policy must be configured

E. S3 Bucket Event Notifications must be configured

Correct Answers: B and C

Explanation

Option A is incorrect because server-side encryption with SSE-KMS is not a requirement. Please find the S3 replication requirements in the referenced links.

Option B is CORRECT because the source bucket owner must have permission to replicate objects on the destination S3 bucket for replication to succeed.

Option C is CORRECT because the source bucket owner must have access permissions to objects being replicated for replication to succeed. It is possible that IAM users other than the S3 bucket owner have permission to put objects in the source bucket. In that scenario, the object owner must grant access permissions on the objects to the bucket owner.

Option D is incorrect because S3 Lifecycle policies allow you to automatically review objects within your S3 Buckets and have them moved to Glacier or have the objects deleted from S3, but they are not responsible for data replication in S3.

Option E is incorrect because the Amazon S3 event notification feature enables you to receive notifications when certain events happen in your bucket. This does not provide a solution to cross-region replication.

A. Create an Origin Access Identity

B. Create CloudFront signed URLs

C. Create IAM Resource policy granting CloudFront access

D. Create CloudFront signed cookies

E. Create CloudFront Service Endpoint

Correct Answers: B and D

Explanation

Option A is incorrect because an origin access identity (OAI) is used to ensure that content in Amazon S3 can be served only through CloudFront. An OAI cannot be handed to customers for distribution; CloudFront itself uses it.

Option B is CORRECT because CloudFront signed URLs allow you to control who can access your content.

Option C is incorrect because IAM resource policy is not required in this scenario.

Option D is CORRECT because CloudFront signed URLs and signed cookies provide the same basic functionality: they allow you to control who can access your content.

Option E is incorrect because CloudFront does not have a service endpoint.
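A signed URL with a canned policy is built from three query parameters: `Expires`, `Signature`, and `Key-Pair-Id`. The Python sketch below shows the canned policy format and CloudFront's URL-safe base64 variant. The actual RSA-SHA1 signing is passed in as a callable because the standard library cannot do RSA. In practice, botocore's `CloudFrontSigner` wraps this step. The distribution domain, key pair ID, and stand-in signer are hypothetical.

```python
import base64
import json
from typing import Callable
from urllib.parse import urlencode


def canned_policy(url: str, expires_epoch: int) -> str:
    """Canned policy JSON; CloudFront requires it without whitespace."""
    policy = {"Statement": [{
        "Resource": url,
        "Condition": {"DateLessThan": {"AWS:EpochTime": expires_epoch}},
    }]}
    return json.dumps(policy, separators=(",", ":"))


def cloudfront_b64(data: bytes) -> str:
    """Base64, then swap the characters that are invalid in a query string."""
    encoded = base64.b64encode(data).decode("ascii")
    return encoded.replace("+", "-").replace("=", "_").replace("/", "~")


def signed_url(url: str, expires_epoch: int, key_pair_id: str,
               rsa_sha1_sign: Callable[[bytes], bytes]) -> str:
    """rsa_sha1_sign must sign with the private key matching key_pair_id."""
    signature = rsa_sha1_sign(canned_policy(url, expires_epoch).encode("utf-8"))
    query = urlencode({
        "Expires": expires_epoch,
        "Signature": cloudfront_b64(signature),
        "Key-Pair-Id": key_pair_id,
    })
    return f"{url}{'&' if '?' in url else '?'}{query}"


# Hypothetical values; the lambda is a stand-in for a real RSA-SHA1 signer.
print(signed_url("https://d111111abcdef8.cloudfront.net/image.jpg", 1357034400,
                 "K2JCJMDEHXQW5F", lambda policy: b"\xfb\xff"))
```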

A. Create a shell script to use AWS CLI acm-pca list-certificates to get the required certificate information for this particular private CA

B. In the AWS ACM console, you can easily get the certificates’ details for each private Certificate Authority. Make sure the IAM user has the list-certificates permissions

C. Edit a Python script to use Boto3 to retrieve the certificate details including the subject name, expiration date, etc

D. Create an audit report to list all of the certificates that the private CA has issued or revoked. Download the JSON-formatted report from the S3 bucket

Correct Answer: D

Explanation

Users can create an audit report for a private CA. The report is saved in an S3 bucket and contains the required information. For reference, see https://docs.aws.amazon.com/acm-pca/latest/userguide/PcaAuditReport.html

Option A is incorrect : Because there is no list-certificates CLI for acm-pca. Check https://docs.aws.amazon.com/cli/latest/reference/acm-pca/index.html#cli-aws-acm-pca .

Option B is incorrect: the ACM console and the list-certificates permission do not give you an easy, complete view of every certificate that a private CA has issued or revoked, together with its details.

Option C is incorrect : This option may work. However, it is not as straightforward as option D. You have to maintain the Python script and use AWS SDK.

Option D is CORRECT : The audit report is the easiest way. The report contains the required details of CA issued or revoked certificates. Take the below as an example:

```json
{
  "awsAccountId": "123456789012",
  "certificateArn": "arn:aws:acm-pca:region:account:certificate-authority/CA_ID/certificate/e8cbd2bedb122329f97706bcfec990f8",
  "serial": "e8:cb:d2:be:db:12:23:29:f9:77:06:bc:fe:c9:90:f8",
  "subject": "1.2.840.113549.1.9.1=#161173616c6573406578616d706c652e636f6d,CN=www.example1.com,OU=Sales,O=Example Company,L=Seattle,ST=Washington,C=US",
  "notBefore": "2018-02-26T18:39:57+0000",
  "notAfter": "2019-02-26T19:39:57+0000",
  "issuedAt": "2018-02-26T19:39:58+0000",
  "revokedAt": "2018-02-26T20:00:36+0000",
  "revocationReason": "KEY_COMPROMISE"
}
```
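Once the JSON report is downloaded from S3, filtering it takes only a few lines. The Python sketch below lists revoked certificates. It assumes the report is a JSON array of records like the sample above (a single record is also accepted) and that timestamps use the `+0000` offset format shown. The embedded report is that sample, trimmed to the fields used here.

```python
import json
from datetime import datetime
from typing import List

TIMESTAMP_FORMAT = "%Y-%m-%dT%H:%M:%S%z"  # e.g. 2018-02-26T18:39:57+0000


def revoked_certificates(report_json: str) -> List[dict]:
    """Return serial, reason and revocation time for each revoked certificate."""
    records = json.loads(report_json)
    if isinstance(records, dict):  # also accept a single record
        records = [records]
    revoked = []
    for record in records:
        if "revokedAt" in record:
            revoked.append({
                "serial": record["serial"],
                "reason": record.get("revocationReason"),
                "revoked_at": datetime.strptime(record["revokedAt"], TIMESTAMP_FORMAT),
            })
    return revoked


# The sample record above, trimmed and wrapped in a list.
REPORT = json.dumps([{
    "awsAccountId": "123456789012",
    "serial": "e8:cb:d2:be:db:12:23:29:f9:77:06:bc:fe:c9:90:f8",
    "notBefore": "2018-02-26T18:39:57+0000",
    "notAfter": "2019-02-26T19:39:57+0000",
    "issuedAt": "2018-02-26T19:39:58+0000",
    "revokedAt": "2018-02-26T20:00:36+0000",
    "revocationReason": "KEY_COMPROMISE",
}])

print(revoked_certificates(REPORT))
```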

A. Enable AWS Shield for cost protection that allows users to request a refund of scaling related costs that result from a DDoS attack

B. Configure Amazon CloudFront to distribute traffic to the application. Ensure that only the Amazon CloudFront distribution can forward requests to the origin

C. Configure AWS Firewall Manager to centrally configure and manage AWS WAF rules across the AWS Organization. Create Firewall Manager policies using the AWS Organization master account

D. Collect VPC Flow Logs to identify network anomalies and DDoS attack vectors. Set up CloudWatch alarms based on the key operational CloudWatch Metrics such as CPUUtilization

Correct Answer: B

Explanation

The AWS best practices for DDoS resiliency can be found in the white paper https://d1.awsstatic.com/whitepapers/Security/DDoS_White_Paper.pdf

Option A is incorrect: cost protection is a feature of AWS Shield Advanced, not of standard AWS Shield. With Shield Advanced, users can claim a limited refund for scaling charges caused by a DDoS attack. Besides, this option does not reduce the attack surface.

Option B is CORRECT : This option improves the origin’s security as malicious users cannot bypass the Amazon CloudFront when accessing the web application. The attack surface is reduced.

Option C is incorrect : AWS Firewall Manager is a central management tool. This method does not reduce the attack surface.

Option D is incorrect : The methods in option D help to gain visibility into abnormal behaviors. However, the attack surface is not reduced.

A. AWS provides a daily credential report to the security contact email of the AWS account.

B. In AWS Trusted Advisor, use the Exposed Access Keys check to identify leaked credentials, set up CloudWatch Event rule target to a Lambda function for remediation.

C. Create a Lambda function using the Exposed Access Keys blueprint to monitor the IAM credentials and notify an SNS topic.

D. Use an open-source tool to scan popular code repositories for access keys that have been exposed to the public. Configure an SQS queue to receive the security alerts.

Correct Answer: B

Explanation

Option A is incorrect because AWS does not send a daily credential report to the security contact. The IAM credential report has to be generated and downloaded on request, and it does not detect leaked keys.

Option B is CORRECT because the Exposed Access Keys check in AWS Trusted Advisor can identify potentially leaked or compromised access keys. Set up a CloudWatch Events rule to catch this event and trigger the Lambda function for remediation.

Option C is incorrect because you do not need to maintain a Lambda function for this, and there is no Exposed Access Keys blueprint available.

Option D is incorrect because Amazon SQS is a message queuing service for decoupling applications. Receiving messages in a queue does not by itself provide alerts when access keys are exposed.
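The CloudWatch Events (EventBridge) rule in option B filters Trusted Advisor events with an event pattern. The Python sketch below shows such a pattern and a small matcher that implements only the simple "value must be one of the listed values" part of EventBridge matching. The `detail-type` string and the `check-name`/`status` field names are assumptions; confirm them in the Trusted Advisor EventBridge documentation before deploying. The sample event is hypothetical.

```python
# Assumed pattern; confirm the exact detail-type and field names before use.
EXPOSED_KEYS_PATTERN = {
    "source": ["aws.trustedadvisor"],
    "detail-type": ["Trusted Advisor Check Item Refresh Notification"],
    "detail": {
        "check-name": ["Exposed Access Keys"],
        "status": ["ERROR"],
    },
}


def matches(pattern: dict, event: dict) -> bool:
    """Small subset of EventBridge matching: each pattern field must exist in
    the event and equal one of the listed values; nested objects recurse."""
    for key, expected in pattern.items():
        if key not in event:
            return False
        actual = event[key]
        if isinstance(expected, dict):
            if not isinstance(actual, dict) or not matches(expected, actual):
                return False
        elif actual not in expected:
            return False
    return True


# Hypothetical, trimmed event.
event = {
    "source": "aws.trustedadvisor",
    "detail-type": "Trusted Advisor Check Item Refresh Notification",
    "detail": {"check-name": "Exposed Access Keys", "status": "ERROR"},
}
print(matches(EXPOSED_KEYS_PATTERN, event))  # True: the rule would invoke the Lambda
```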

A. Configure AWS Config rules using Lambda functions. Whenever config rules become non-compliant, the Lambda functions send notifications to an SNS topic

B. Integrate AWS Config rules with an Amazon Kinesis stream to perform real-time analysis and notifications

C. Set remediation action to Systems Manager Automation “AWS-PublishSNSNotification”, add an SNS topic as the target to provide notifications

D. Configure each AWS Config rule with a CloudWatch alarm. Trigger the alarm if the rule becomes non-compliant

Correct Answer: C

Explanation

Option A is incorrect because creating, maintaining, and managing Lambda functions for several Config rules is not an efficient solution.

Option B is incorrect because AWS Config compliance changes are already delivered as near real-time events through CloudWatch Events. Adding an Amazon Kinesis stream would not be the most efficient or cost-effective way to provide the notification.

Option C is CORRECT because users can configure remediation action with Systems Manager Automation “AWS-PublishSNSNotification” to send notifications through an SNS topic. AWS Systems Manager Automation provides predefined runbooks for Amazon Simple Notification Service.

Option D is incorrect because AWS Config rules do not integrate with CloudWatch alarms to notify configuration changes.
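Option C's remediation action is configured per Config rule. The Python helper below builds one entry for the Config PutRemediationConfigurations API that targets the `AWS-PublishSNSNotification` runbook. This is a sketch under stated assumptions. The runbook parameter names (`AutomationAssumeRole`, `TopicArn`, `Message`) should be checked against the runbook's page in Systems Manager. The rule name, topic ARN, role ARN, and retry values are hypothetical.

```python
def sns_remediation_config(rule_name: str, topic_arn: str,
                           automation_role_arn: str) -> dict:
    """One entry for Config PutRemediationConfigurations that runs the
    AWS-PublishSNSNotification Automation runbook for non-compliant resources."""
    return {
        "ConfigRuleName": rule_name,
        "TargetType": "SSM_DOCUMENT",
        "TargetId": "AWS-PublishSNSNotification",
        "Parameters": {
            "AutomationAssumeRole": {"StaticValue": {"Values": [automation_role_arn]}},
            "TopicArn": {"StaticValue": {"Values": [topic_arn]}},
            # Use the ID of the non-compliant resource as the message body.
            "Message": {"ResourceValue": {"Value": "RESOURCE_ID"}},
        },
        "Automatic": True,
        "MaximumAutomaticAttempts": 3,
        "RetryAttemptSeconds": 60,
    }


# Hypothetical names and ARNs, for illustration only.
config = sns_remediation_config(
    "s3-bucket-public-read-prohibited",
    "arn:aws:sns:eu-west-2:111122223333:config-alerts",
    "arn:aws:iam::111122223333:role/ConfigRemediationRole",
)
print(config["TargetId"])

# With credentials configured (not executed here):
# import boto3
# boto3.client("config").put_remediation_configurations(RemediationConfigurations=[config])
```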

I hope you now understand what is expected in the AWS Security Specialty certification exam and how to prepare for it. Go through these AWS questions and the detailed answers, note which domain each question belongs to, and familiarise yourself with them. Keep learning!

Dharmendra Digari carries years of experience as a product manager. He pursued his MBA, which honed his skills of seeing products differently than others perceive. He specialises in products from the information technology and services domain, with a proven history of expertise. His skills include AWS, Google Cloud Platform, Customer Relationship Management, IT Business Analysis and Customer Service Operations. He has specifically helped many companies in the e-commerce domain establish themselves with refined and well-developed products, carving a niche for themselves.